Intro to the Core Rule Set

The OWASP ModSecurity Core Rule Set (CRS) is a set of attack and anomaly detection rules that protect web applications. Recent versions of the CRS group rules by strictness into “Paranoia Levels”. The lowest level holds the basic, must-have rules with a low false positive rate; each higher level raises the false positive rate and narrows the range of acceptable payloads.

Paranoia levels (PL) are cumulative. In the current 3.1 dev version there are 77 rules at level zero (mostly configuration rules). PL1 may run up to 197 rules in addition to the 77 rules of level zero. PL2 runs the previous 274 rules plus 84 additional rules. PL3 may run the previous 358 rules plus 53 additional rules. Finally, PL4 includes the previous 411 rules plus 58 additional rules, for a total of 469 rules.
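The running totals quoted above can be checked with a few lines of Python. This is only a sketch of the arithmetic; the per-level counts are the ones given in the text, and the names RULES_ADDED and cumulative_rule_counts are made up for the illustration.

```python
from itertools import accumulate

# Rules added at each paranoia level, as quoted in the text (CRS 3.1 dev).
RULES_ADDED = {0: 77, 1: 197, 2: 84, 3: 53, 4: 58}


def cumulative_rule_counts(added):
    """Return {level: number of rules that may run at that level}."""
    levels = sorted(added)
    totals = accumulate(added[level] for level in levels)
    return dict(zip(levels, totals))


if __name__ == "__main__":
    for level, total in cumulative_rule_counts(RULES_ADDED).items():
        print(f"PL{level}: {total} rules")
```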

Another interesting feature of the CRS is anomaly scoring: inbound and outbound thresholds deny a transaction when its anomaly score reaches the declared maximum. The CRS can be configured to deny a transaction immediately when a rule triggers, or only after the anomaly score is evaluated.

Picking the right CRS settings

For most web applications, running the basic set of rules included in PL1 is enough. It has the benefit of a very low false positive rate, so the effort required to protect the web application is small. Some of the rules in level one have sibling rules with stricter settings in the upper levels.

The paranoia level, anomaly score values, and thresholds should be selected according to the nature of the web application, the complexity of the content being exchanged, and the sensitivity of the data. PL1 suffices for most compliance use cases and when fast implementation, low false positive rates, and performance are of utmost importance. PL4 is a better choice if the web application handles financial transactions, personal data, or critical operations, where a performance hit and a lot of rule tailoring cost less than having the data or the application compromised by an attacker.

PL1 should be considered a baseline or bare minimum configuration. PL2 is a good candidate for applications handling data of some value. PL3 is a good pick for protecting sensitive, financial, and personal data, and it is also recommended for applications handling complex payloads. PL4 accepts only a limited set of characters and has little tolerance for non-alphanumeric characters, repetition, and words with special meaning.

CRS from zero

Once the CRS is installed, there are several settings to adjust. The crs-setup.conf file is where the general behavior of the WAF is configured. It holds the SecDefaultAction directives, which define whether a transaction is denied on a single rule hit or only after all rules are evaluated. The paranoia level, the anomaly scores per criticality, and the blocking thresholds are also defined there, along with the transaction limits and optional features (lengths, types, DoS, reputation checks).

The combination of paranoia level, anomaly scores, and thresholds must match the needs of the web application. The figure below illustrates how the paranoia level and anomaly threshold settings differ between website types.

Figure 1: Anomaly Threshold / Paranoia Level Quadrant

Items to configure

- Paranoia level

- Anomaly score values

- Anomaly limits (thresholds)

- CMS whitelists

- Allowed HTTP methods

- Allowed content-types

- Allowed HTTP versions

- Restricted file extensions

- Restricted headers

- Static file extensions

- Maximum element lengths

- Transaction sampling percentage

- Active defense and GeoIP

- Denial of Service

- Validate UTF-8

- Reputation checks

- CRS version and debugging

tx.paranoia_level=1

tx.critical_anomaly_score=5

tx.error_anomaly_score=4

tx.warning_anomaly_score=3

tx.notice_anomaly_score=2

tx.inbound_anomaly_score_threshold=5

tx.outbound_anomaly_score_threshold=4

SecRule REQUEST_URI "@beginsWith /wordpress/"

tx.crs_exclusions_wordpress=1

tx.crs_exclusions_drupal=1

tx.crs_exclusions_wordpress=1

tx.allowed_methods=GET HEAD POST OPTIONS

tx.allowed_request_content_type=application/x-www-form-urlencoded|multipart/form-data|text/xml|application/xml|application/soap+xml|application/x-amf|application/json|application/octet-stream|text/plain

tx.allowed_http_versions=HTTP/1.0 HTTP/1.1 HTTP/2 HTTP/2.0

tx.restricted_extensions=.asa/ .asax/ .ascx/ .axd/ .backup/ .bak/ .bat/ .cdx/ .cer/ .cfg/ .cmd/ .com/ .config/ .conf/ .cs/ .csproj/ .csr/ .dat/ .db/ .dbf/ .dll/ .dos/ .htr/ .htw/ .ida/ .idc/ .idq/ .inc/ .ini/ .key/ .licx/ .lnk/ .log/ .mdb/ .old/ .pass/ .pdb/ .pol/ .printer/ .pwd/ .resources/ .resx/ .sql/ .sys/ .vb/ .vbs/ .vbproj/ .vsdisco/ .webinfo/ .xsd/ .xsx/

tx.restricted_headers=/proxy/ /lock-token/ /content-range/ /translate/ /if/

tx.static_extensions=/.jpg/ /.jpeg/ /.png/ /.gif/ /.js/ /.css/ /.ico/ /.svg/ /.webp/

tx.max_num_args=255

tx.arg_name_length=100

tx.arg_length=400

tx.total_arg_length=64000

tx.max_file_size=1048576

tx.combined_file_sizes=1048576

tx.sampling_percentage=100

tx.block_search_ip=1

tx.block_suspicious_ip=1

tx.block_spammer_ip=1

tx.block_harvester_ip=1

tx.high_risk_country_codes=UA ID YU LT EG RO BG TR RU PK MY CN

tx.high_risk_country_codes=

tx.dos_burst_time_slice=60

tx.dos_counter_threshold=100

tx.dos_block_timeout=600

tx.crs_validate_utf8_encoding=1

tx.do_reput_block=1

tx.reput_block_duration=300

tx.crs_debug_mode=1

tx.crs_setup_version=302

Exception handling

Some events require the CRS to be tuned to make an application work, in particular when a request or response contains elements that are legitimate for the application but match a rule. Not all web applications are 100% compatible with the CRS, especially at the upper paranoia levels. In PL4, CRS tuning is almost mandatory, as most applications will break partially or completely because of several very strict rules.

ModSecurity logs record what caused a rule to trigger, which rule was triggered, and the element that contains the matching payload. There is an interesting post by Ryan Barnett about exception handling on the SpiderLabs blog that is worth reading.

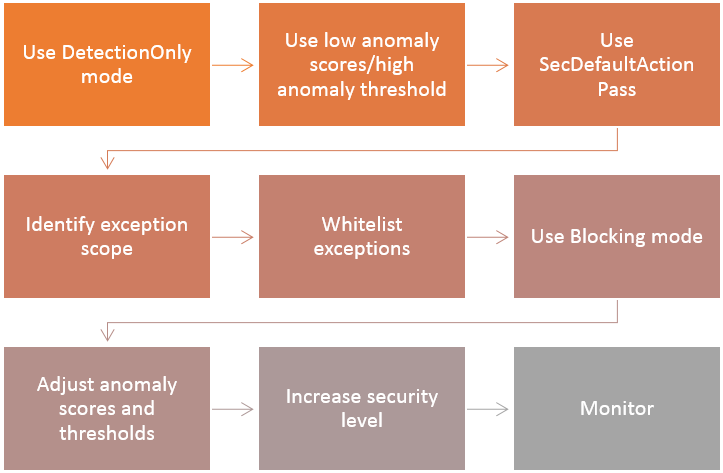

It is often a good idea to adopt the CRS gradually: start with relaxed settings and increase security as the false positives are addressed, to avoid thousands of alerts and an unusable application. Repeat this process until the desired level of protection, performance, and usability is reached.

Figure 2: Tips for tailoring the ruleset

The process of adopting the CRS must be continuous: every application change may introduce new false positives and malfunctions, as may every update of the CRS. This continuous cycle is also important because false negatives are possible, often caused by new vulnerabilities and attacks that are not detected or that slip through the exceptions.

Tuning the CRS can be done in three phases:

- Discovering false positives and anomalies

- Adjusting whitelist exceptions and positive validation

- Testing and monitoring

Discovering false positives and anomalies

DetectionOnly

ModSecurity can be configured to block transactions or only to alert on attacks. Setting the engine to detection-only mode with SecRuleEngine DetectionOnly makes ModSecurity process transactions but prevents it from performing disruptive actions (e.g., block, deny, drop, allow, proxy, and redirect). This mode allows the web application to send and receive data while events are logged whenever a rule triggers.

Once all false positives have been handled in detection-only mode, the engine must be switched to blocking mode with SecRuleEngine On to start blocking attacks; additional rule tuning may be required in this mode.

Anomaly score/thresholds for reducing service disruptions

There are multiple anomaly score counters, increments, and thresholds. They define how many points a rule hit adds to the anomaly score and the maximum score allowed before a transaction is denied.

The scores are configured in crs-setup.conf, and the default scores per severity level are as follows:

- CRITICAL severity: Anomaly Score of 5.

- ERROR severity: Anomaly Score of 4.

- WARNING severity: Anomaly Score of 3.

- NOTICE severity: Anomaly Score of 2.

The CRS also tracks scores per attack type, along with the total; these values are useful while debugging or for rule verification. Some of the scores are:

- total=%{anomaly_score}e

- sqli=%{sql_injection_score}e

- xss=%{xss_score}e

- rfi=%{rfi_score}e

- lfi=%{lfi_score}e

- rce=%{rce_score}e

- php=%{php_injection_score}e

- http=%{http_violation_score}e

- ses=%{session_fixation_score}e

The defined thresholds are checked for incoming requests and outgoing responses. Anomaly scores are per transaction, and if a transaction reaches the threshold, ModSecurity will forbid it. By default the thresholds are low, so during the initial tuning phase it is better to raise them to a very high limit (e.g., 100X) to reduce request rejections as much as possible.

The variables controlling the thresholds are:

- tx.inbound_anomaly_score_threshold=5

- tx.outbound_anomaly_score_threshold=4
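As a rough illustration of how the severity scores and the inbound threshold interact, the sketch below sums the scores of the rules a transaction triggered and compares the total with the threshold. It is a simplified model, not CRS code: the function names are invented here, and it assumes a transaction is blocked as soon as its score reaches the threshold.

```python
# Default per-severity scores from crs-setup.conf, as listed above.
SEVERITY_SCORES = {"CRITICAL": 5, "ERROR": 4, "WARNING": 3, "NOTICE": 2}


def anomaly_score(severities, scores=SEVERITY_SCORES):
    """Sum the score of every rule hit, given the severity of each hit."""
    return sum(scores[severity] for severity in severities)


def is_blocked(severities, threshold=5, scores=SEVERITY_SCORES):
    """Assumption: the transaction is denied when score >= threshold."""
    return anomaly_score(severities, scores) >= threshold


if __name__ == "__main__":
    print(is_blocked(["WARNING"]))                   # one warning, default threshold
    print(is_blocked(["WARNING", "NOTICE"]))         # two small anomalies add up
    print(is_blocked(["CRITICAL"], threshold=500))   # tuning phase, very high threshold
```

Raising the threshold during tuning, as suggested above, keeps every rule hit in the logs while the comparison never succeeds.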

SecDefaultAction

This directive defines the default list of actions, which is inherited by the rules in the same configuration context and phase. Configuration contexts are an Apache feature; the context is the scope to which the inheritance applies, for example the virtual host or location level. There is an important constraint: phase 1 actions are only processed in the virtual host context and are discarded in the location context because of an Apache configuration limitation.

During the early tuning period, and until the configuration is stable, it is easier to set it to SecDefaultAction phase:2,log,auditlog,pass. Once the tuning is stable, switch it to SecDefaultAction phase:2,log,auditlog,deny.

Adjusting whitelist exceptions and positive validation

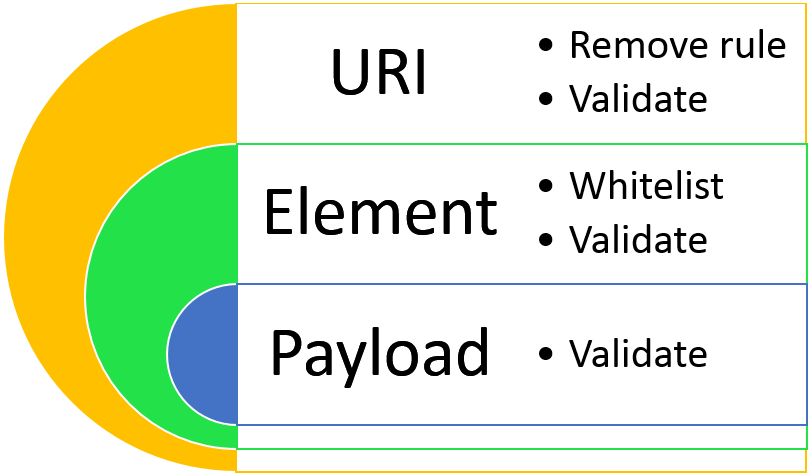

Once ModSecurity is generating alerts without disrupting the application flow, it is easier to identify how widely an exception applies within the application. Some elements, such as cookies, are sent in every request to the same FQDN/IP and port. Usually a cookie reaches the entire website, unless it carries a path limitation that reduces its scope to a given URI and everything below it.

Figure 3: Scope

Identify exception scope

In many cases, tuning the CRS requires excluding elements from inspection, and sometimes drastic measures such as removing a rule entirely. This is like having a protective shield and opening holes in it here and there. Opening too many holes, or holes that are too big, turns the shield into a Gruyère or a donut. There must be a balance between usability and security.

A common example is an application that sends a GET parameter containing special characters to a single page, as in the extract below:

$ grep "confirm(1)" audit.log |tail -1|python -c 'import json,sys;log=json.load(sys.stdin); print log["request"]["request_line"]; print log["audit_data"]["messages"];'

GET /test_area/edit.php?x=confirm%281%29 HTTP/1.1

[u'Warning. Found 2 byte(s) in ARGS:x outside range: 38,44-46,48-58,61,65-90,95,97-122. [file "mod_perl"] [line "304"]

[id "920273"] [rev "2"] [msg "Invalid character in request (outside of very strict set)"] [severity "CRITICAL"] [ver "OWASP_CRS/3.0.2"] [maturity "9"] [accuracy "9"] [tag "application-multi"] [tag "language-multi"] [tag "platform-multi"] [tag "attack-protocol"] [tag "OWASP_CRS/PROTOCOL_VIOLATION/EVASION"] [tag "paranoia-level/4"]',

u'Warning. Pattern match "((?:[\\\\~\\\\!\\\\@\\\\#\\\\$\\\\%\\\\^\\\\&\\\\*\\\\(\\\\)\\\\-\\\\+\\\\=\\\\{\\\\}\\\\[\\\\]\\\\|\\\\:\\\\;\\"\\\\\'\\\\\\xc2\\xb4\\\\\\xe2\\x80\\x99\\\\\\xe2\\x80\\x98\\\\`\\\\<\\\\>][^\\\\~\\\\!\\\\@\\\\#\\\\$\\\\%\\\\^\\\\&\\\\*\\\\(\\\\)\\\\-\\\\+\\\\=\\\\{\\\\}\\\\[\\\\]\\\\|\\\\:\\\\;\\"\\\\\'\\\\\\xc2\\xb4\\\\\\xe2\\x80\\x99\\\\\\xe2\\x80\\x98\\\\`\\\\<\\\\>]*?){2})" at ARGS:x. [file "mod_perl"] [line "488"]

[id "942432"] [rev "2"] [msg "Restricted SQL Character Anomaly Detection (args): # of special characters exceeded (2)"] [data "Matched Data: (1) found within ARGS:x: confirm(1)"] [severity "WARNING"] [ver "OWASP_CRS/3.0.2"] [maturity "9"] [accuracy "8"] [tag "application-multi"] [tag "language-multi"] [tag "platform-multi"] [tag "attack-sqli"] [tag "OWASP_CRS/WEB_ATTACK/SQL_INJECTION"] [tag "WASCTC/WASC-19"] [tag "OWASP_TOP_10/A1"] [tag "OWASP_AppSensor/CIE1"] [tag "PCI/6.5.2"] [tag "paranoia-level/4"]']

In the example we need the application to be able to send a GET argument called “x” containing “confirm(1)” to the /test_area/edit.php URI. This payload triggered two rules: 920273, because the ASCII values of the parentheses are not in the allowed range, and 942432, because there are two or more blacklisted characters in the payload.
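The one-liner in the extract uses Python 2 print syntax. A Python 3 sketch of the same idea follows; it assumes, as the one-liner does, that the audit log is written in JSON with one entry per line and that the entry exposes request.request_line and audit_data.messages. The function name summarize_entry and the script name used below are illustrative only.

```python
import json
import re
import sys

# Rule ids appear in each message as [id "920273"].
RULE_ID = re.compile(r'\[id "(\d+)"\]')


def summarize_entry(line):
    """Return (request_line, [rule ids]) for one JSON audit log entry."""
    entry = json.loads(line)
    request_line = entry["request"]["request_line"]
    messages = entry.get("audit_data", {}).get("messages", [])
    rule_ids = []
    for message in messages:
        rule_ids.extend(RULE_ID.findall(message))
    return request_line, rule_ids


if __name__ == "__main__":
    # Read audit log entries from standard input, one JSON document per line.
    for raw in sys.stdin:
        if raw.strip():
            request_line, rule_ids = summarize_entry(raw)
            print(request_line, rule_ids)
```

Saved under a hypothetical name such as summarize_audit.py, it can replace the Python part of the pipeline: grep "confirm(1)" audit.log | tail -1 | python3 summarize_audit.py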

To get the application working, the issue can be addressed in multiple ways. Here are some variants, ordered by scope from widest to narrowest:

- Comment out rules 920273 and 942432 in the configuration files

- Disable the rules

SecRuleRemoveById 920273 942432

- Whitelist the x argument from those rules

SecRuleUpdateTargetById 920273 !ARGS:x

SecRuleUpdateTargetById 942432 !ARGS:x

- Remove the rules if the URI matches, using a ModSecurity runtime rule

SecRule REQUEST_URI "@rx ^/test_area/edit\.php(?:\?|$)" "id:1,pass,ctl:ruleRemoveById=920273,ctl:ruleRemoveById=942432"

- Remove the rules if the URI matches, using a Location block

<Location /test_area/edit.php>

SecRuleRemoveById 920273 942432

</Location>

- Remove the rules if the URI matches, using a LocationMatch block

<LocationMatch ^/test_area/edit\.php$>

SecRuleRemoveById 920273 942432

</LocationMatch>

- Whitelist the x argument from the rules if the URI matches, using a ModSecurity runtime rule

SecRule REQUEST_URI "@rx ^/test_area/edit\.php(?:\?|$)" "id:1,pass,ctl:ruleRemoveTargetById=920273;ARGS:x,ctl:ruleRemoveTargetById=942432;ARGS:x"

- Whitelist the x argument from the rules if the URI matches and the argument carries the expected value, using a chained rule

SecRule REQUEST_URI "@rx ^/test_area/edit\.php(?:\?|$)" "chain,id:1,pass,ctl:ruleRemoveTargetById=920273;ARGS:x,ctl:ruleRemoveTargetById=942432;ARGS:x"

SecRule ARGS:x "@streq confirm(1)"

ModSecurity offers many ways to achieve the same result, and several variables may carry the required information. The previous examples used REQUEST_URI, but the QUERY_STRING, REQUEST_BASENAME, REQUEST_FILENAME, REQUEST_LINE, and REQUEST_URI_RAW variables contain variants of the same information. Because REQUEST_URI includes the query string, the patterns accept either the end of the URI or a ? after edit.php.

All the example rules get the application to work, but they have different scopes. For quick results, if security is not a concern, a wide scope is easier and faster. For security purposes, the more focused and restrictive the exceptions, the better. A precise ruleset takes more effort and time to create, but it makes the application more resilient to attacks and false negatives.
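To see how the request variables mentioned above relate to each other, the following sketch splits the request line from the example into approximations of those variables. It is only an approximation written with the Python standard library, not ModSecurity's own parsing; the function name request_variables is made up for the illustration.

```python
import posixpath
import re
from urllib.parse import urlsplit


def request_variables(request_line):
    """Approximate a few ModSecurity request variables for a request line."""
    _method, raw_uri, _protocol = request_line.split(" ", 2)
    parts = urlsplit(raw_uri)
    return {
        "REQUEST_LINE": request_line,
        "REQUEST_URI": raw_uri,                 # path plus query string
        "REQUEST_FILENAME": parts.path,         # path without query string
        "REQUEST_BASENAME": posixpath.basename(parts.path),
        "QUERY_STRING": parts.query,
    }


if __name__ == "__main__":
    variables = request_variables("GET /test_area/edit.php?x=confirm%281%29 HTTP/1.1")
    for name, value in variables.items():
        print(f"{name}: {value}")
    # A pattern anchored with $ right after the file name matches the file
    # name but not the full URI, because the URI carries the query string.
    anchored = re.compile(r"^/test_area/edit\.php$")
    print(bool(anchored.search(variables["REQUEST_FILENAME"])))
    print(bool(anchored.search(variables["REQUEST_URI"])))
```

Choosing the variable that holds exactly the part of the request being tested keeps the exception pattern simple and its scope easy to reason about.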

Blocking mode

ModSecurity's normal operation mode is set with SecRuleEngine On, which allows it to perform disruptive actions on transactions. Once the false positive rate reaches an acceptable level, it is time to enable this mode so the CRS can do its job properly.

Anomaly score/thresholds for preventing attacks

Once the false positive rate is at an acceptable level, the anomaly scores and the inbound and outbound thresholds must be adjusted so that occasional low severity warnings do not block requests or cripple the application, while attacks and malformed requests are still rejected. Usually, the CRS increases the anomaly score by the critical score when there is a clear indication of attack, and by the notice or warning score for small anomalies. The variables holding the scores and thresholds in crs-setup.conf have to be adjusted to fit regular application usage.

One example is a website that is reached by IP address instead of hostname and whose clients use mobile devices. Mobile devices, especially those running outdated operating system versions, often do not send common headers that the CRS expects, such as the ACCEPT or USER-AGENT headers. Rule 920350 increases the anomaly score by the warning score (3), rule 920320 by the notice score (2), and rule 920300 also by the notice score (2). The resulting total anomaly score of 7 exceeds the inbound anomaly score threshold, which causes rule 949110 to deny a completely normal request for that site.

Given this example, inbound_anomaly_score_threshold must be raised above 7. If the goal is to deny requests that trigger one or more critical severity rules, the threshold must be set to 12, which equals the ever-present anomaly score of 7 plus the 5 points of a single critical anomaly. This method is not perfect, because a client that does send the ACCEPT and USER-AGENT headers starts from a lower score and can smuggle in a critical and a warning event without being blocked. In this case, since most clients will not provide the ACCEPT and USER-AGENT headers, it makes more sense to stop those rules from increasing the score or simply to disable them.
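The arithmetic of this example can be laid out in a short sketch. It assumes the default severity scores and that a request is blocked once its score reaches the threshold (score >= threshold); the helper names are invented for the illustration.

```python
SCORES = {"CRITICAL": 5, "ERROR": 4, "WARNING": 3, "NOTICE": 2}

# Rules that every request from the mobile clients triggers in the example.
MOBILE_BASELINE = {"920350": "WARNING", "920320": "NOTICE", "920300": "NOTICE"}

# A client that sends the ACCEPT and USER-AGENT headers only triggers 920350.
FULL_HEADERS_BASELINE = {"920350": "WARNING"}


def baseline_score(rules):
    """Score a request collects before any real attack indicator fires."""
    return sum(SCORES[severity] for severity in rules.values())


def threshold_blocking_one_critical(rules):
    """Highest threshold that still blocks baseline plus one CRITICAL hit."""
    return baseline_score(rules) + SCORES["CRITICAL"]


def headroom(threshold, rules):
    """Largest extra score a client can add without reaching the threshold."""
    return threshold - 1 - baseline_score(rules)


if __name__ == "__main__":
    threshold = threshold_blocking_one_critical(MOBILE_BASELINE)
    print("baseline:", baseline_score(MOBILE_BASELINE))
    print("threshold:", threshold)
    print("headroom, mobile client:", headroom(threshold, MOBILE_BASELINE))
    print("headroom, full headers:", headroom(threshold, FULL_HEADERS_BASELINE))
```

The headroom of the client that sends all headers is what lets it combine a critical and a warning event unnoticed, which is why disabling or re-scoring the three baseline rules is the cleaner fix.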

Testing for improvements and monitoring the WAF

At this point, the CRS configuration is stable, and recurring monitoring and testing processes have to be established in order to find false negatives, detect new attacks, increase the security level, and update the CRS version.

Increase the security level

If the desired level of security has still not been reached, it is time to raise the paranoia level to the next level and to start adding positive rules that accept only requests containing validated payloads.

Increasing the paranoia level is a good option for applications that use standard character sets and no complex payloads.

The best addition to the CRS is a custom rule set specific to the application, one that performs positive validation on the different elements present in the application and allows only those that are expected.

Basic positive security checks (easily scripted):

- URIs

- Header names and values

- Cookie names and values

- Argument names and values

- Resource types

- Lengths

- Methods, protocols, encodings

Other advanced checks:

- User and application behavior

- Application logic
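The basic checks listed above lend themselves to scripting. The sketch below shows the idea of positive validation in plain Python: a request passes only if its URI, method, argument names, and argument values are all on an allow-list. The profile contents and the names PROFILE and validate are hypothetical; a real deployment would express the same checks as ModSecurity rules.

```python
import re
from urllib.parse import parse_qsl, urlsplit

# Hypothetical allow-list profile: one known URI, its methods and arguments.
PROFILE = {
    "/test_area/edit.php": {
        "methods": {"GET", "POST"},
        "args": {"x": re.compile(r"[a-z0-9()]{1,32}")},
    }
}


def validate(method, uri, profile=PROFILE):
    """Return a list of problems; an empty list means the request is expected."""
    parts = urlsplit(uri)
    rules = profile.get(parts.path)
    if rules is None:
        return ["unknown URI"]
    problems = []
    if method not in rules["methods"]:
        problems.append(f"method {method} not allowed")
    # parse_qsl URL-decodes names and values before they are checked.
    for name, value in parse_qsl(parts.query, keep_blank_values=True):
        pattern = rules["args"].get(name)
        if pattern is None:
            problems.append(f"unexpected argument {name}")
        elif not pattern.fullmatch(value):
            problems.append(f"invalid value for {name}")
    return problems


if __name__ == "__main__":
    print(validate("GET", "/test_area/edit.php?x=confirm%281%29"))
    print(validate("DELETE", "/test_area/edit.php?y=1"))
```

Anything not described by the profile is rejected, which is the opposite of the blacklist approach used by most CRS rules.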

Monitoring

It is essential to monitor the WAF logs to identify attacks and service disruptions. ModSecurity writes to the Apache error log and to the ModSecurity audit log: the first holds a short extract of each triggered event; the second stores the entire request and response, as well as all rule events and other errors.

It is also convenient to push all the logs to centralized storage and use them to trigger alarms and to correlate with other logs. A short introduction on how to use ELK to parse the audit log is here

Automating the process

Several tools help create ModSecurity rules and whitelists. To name a few:

This simple whitelist generator bash script builds a configuration file to be imported into Apache. It reads the error log and generates Location blocks containing the list of rules to be removed. This strategy is not the best for security, but it helps to configure noncritical applications quickly. There is a more recent tool called auditlog2db by the same author that can read audit logs.

WAFme is a Python tool that I use to generate whitelists quickly. WAFme uses the audit log and can generate ModSecurity rules that whitelist the desired element. If an element is present in a configured number of different URIs, it whitelists the element for the entire application.

WAFme is intended to tail live audit logs and generate the rules and exceptions that keep ModSecurity from blocking regular use of the website or web application.

To use the tool:

- Clone it from GitHub

- Configure the OUTPUT_FILE, the audit.log location, exceptions and other global variables, and the web server restart script

- Navigate the website (use an automated test suite if available) and press CTRL+C to generate the ruleset and reload. The screen output includes a requests Python command that can be used to reproduce the same request. In many cases, ruleset generation takes many iterations, because once a rule blocks a request, other elements and rules may not have been processed yet.

- Test the navigation again until no requests are denied and the website navigation is flawless.
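The scope decision described for WAFme (an element seen in a configured number of different URIs is whitelisted for the entire application) could be sketched as follows. This is not WAFme's code; it is an independent illustration with made-up names (build_exclusions, global_after, first_id) that emits standard ModSecurity directives as text.

```python
from collections import defaultdict


def build_exclusions(hits, global_after=3, first_id=10000):
    """Turn (rule_id, target, uri) hits into exclusion directives.

    A (rule, target) pair seen on at least `global_after` different URIs is
    excluded for the whole application; otherwise one exclusion per URI.
    """
    uris_by_pair = defaultdict(set)
    for rule_id, target, uri in hits:
        uris_by_pair[(rule_id, target)].add(uri)

    directives = []
    next_id = first_id
    for (rule_id, target), uris in sorted(uris_by_pair.items()):
        if len(uris) >= global_after:
            # Wide scope: the target is never inspected by this rule.
            directives.append(f"SecRuleUpdateTargetById {rule_id} !{target}")
            continue
        for uri in sorted(uris):
            # Narrow scope: exclude the target only for this URI.
            directives.append(
                f'SecRule REQUEST_FILENAME "@streq {uri}" '
                f'"id:{next_id},phase:1,pass,nolog,'
                f'ctl:ruleRemoveTargetById={rule_id};{target}"'
            )
            next_id += 1
    return directives


if __name__ == "__main__":
    sample_hits = [
        ("942432", "ARGS:x", "/a"),
        ("942432", "ARGS:x", "/b"),
        ("942432", "ARGS:x", "/c"),
        ("920273", "ARGS:y", "/a"),
    ]
    for directive in build_exclusions(sample_hits):
        print(directive)
```

The global_after value is the trade-off knob: a low value produces fewer, wider exceptions, while a high value keeps exceptions tied to individual URIs.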

Webappprofiler is a Python tool that uses the audit log or ZAP proxy to generate positive validation rules automatically. It generates location blocks, checks allowed methods and element names, and generates regular expressions to check the element values. I wrote a whitepaper covering the tool usage and web application profiling.

There are other virtual patching tools that can generate ModSecurity rules to prevent detected vulnerabilities from being exploited. ThreadFix and the zap2modsec Perl script are examples that may be very useful.